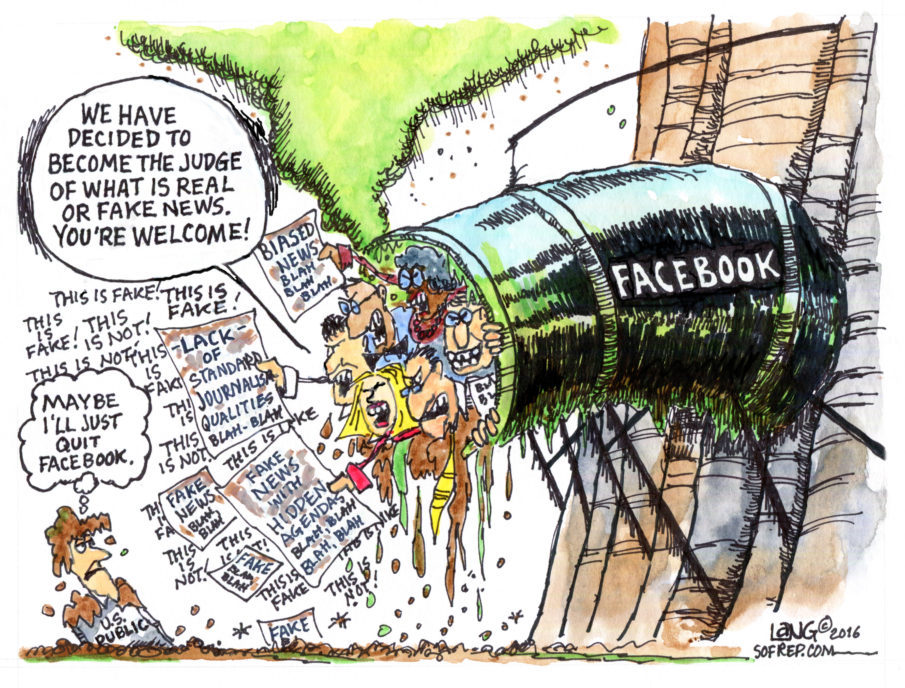

Facebook announced a series of new features it would be introducing to the social media site in an effort to combat the distribution of fake news stories. These features come after Facebook and other internet giants like Google received criticism in the media for permitting the spread of false news stories that some claim turned the tide of the recent presidential election in Donald Trump’s favor.

According to a statement made by the social media powerhouse, these new changes will make it easier for users to flag hoax news stories they find in their news feeds. The company also announced plans to work with third-party sites to better determine the credibility of content, citing well-known outlets such as Snopes.com, ABC News and the Associated Press as likely partners.

Articles that have been flagged and then assessed to be inaccurate will receive a “disputed” tag and may appear lower in other users’ news feeds. Facebook will then pair the article with a link explaining why the content has been deemed false. Facebook stated that once a link has been marked as “disputed,” it can no longer be promoted through Facebook’s paid promotions algorithm, dramatically reducing its potential social media reach.

“We believe in giving people a voice and that we cannot become arbiters of truth ourselves, so we’re approaching this problem carefully,” Facebook leadership said via a news release.

Currently, forty-three organizations have signed up to serve as third-party fact checkers to help assess the credibility of flagged news stories. Each of these organizations has been asked to sign a “code of principles” that requires political objectivity, transparency of sources, and a commitment to open and honest assessments of the content that is flagged.

While many on the left fault Facebook and other sites for allowing fake news to affect the voting populace, Facebook came under fire earlier in the year for intentionally censoring or suppressing real news stories in an effort to stifle conservative politics on the platform. Facebook denied any political bias in its methodology, but switched to an algorithm-based trending news system rather than having individual, and potentially biased, human beings choose which stories to feature on the right-hand side of users’ dashboards.

“We take allegations of bias very seriously. Facebook is a platform for people and perspectives from across the political spectrum. Trending Topics shows you the popular topics and hashtags that are being talked about on Facebook,” the company told USA Today at the time. Its choice to do away with human editors, though, could be seen as an admission that people can allow their biases to affect how they judge news stories as valid and worthy of public attention. Facebook’s new strategy brings the human element back into the system, but outsources that element to third-party sites so the platform itself can avoid taking responsibility for the determinations made about the credibility of stories and topics.

At the risk of sounding alarmist, these new tools may not usher in a new golden age in news. These tools and others like them could easily be abused by large news distributors like the aforementioned ABC. All major news stories are currently funneled through a handful of large distributors in the United States. These distributors are often collectively referred to as “the mainstream media,” and they are no longer seen as the noble, trench-coat-wearing journalists we imagine from yesteryear.

ABC News is owned by Disney, whose CEO publicly campaigned for Hillary Clinton. CNN is also owned by a large company (Time Warner) that donated millions to the Clinton campaign. On the opposite end of the spectrum, Fox News can hardly be considered objective and without bias either, leaving this writer for a small, internet-based news site to wonder: by whose standard are we to be graded? If the content SOFREP’s team of writers produces isn’t in keeping with the mainstream perspective, will that earn us the ire of Facebook’s new filtering method? Should we expect to be assessed objectively by third-party sites that pay their bills by competing for clicks just like we do? Asking ABC, CNN or any other news site that runs on advertising revenue to determine the credibility of considerably smaller sites in the eyes of social media users is akin to asking Microsoft to tell us which tech company can give us the best software.

I’m not suggesting that nothing should be done. Facebook’s new method clearly needs some refining, and in time, it might be extremely effective in rooting out some of the click-bait garbage we all hate seeing in our news feeds. It just seems worth noting that the current plan is effectively a return to the old one: having people decide what news is real and how far it can spread.

And anytime we hand that kind of power to anyone, I’m going to be critical.
