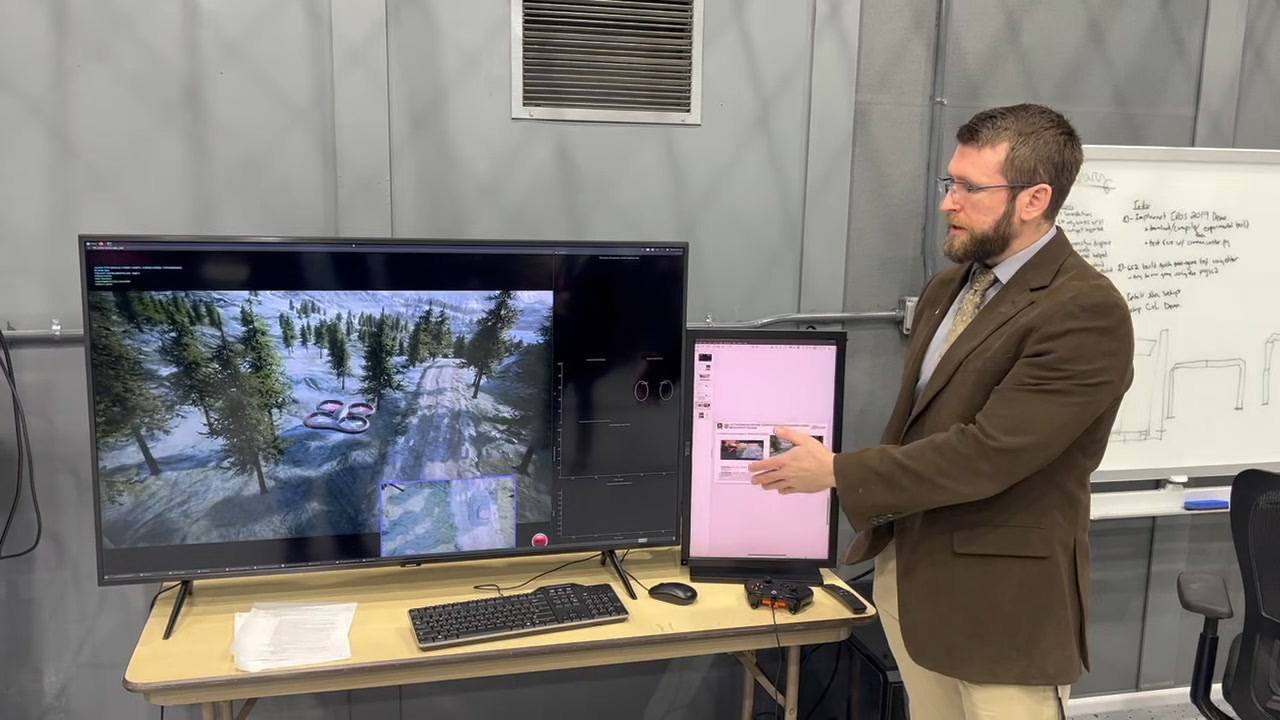

For instance, the drone might receive commands from a soldier about images its cameras pick up on the ground below, and instantly learn both the commanded action and the imagery associated with it, adding them to its existing “library” so that it can perform the task autonomously going forward.

“We have tools to allow humans and AI to work together,” Waytowich said.

Warfare in the future is likely to involve a dangerous and unpredictable mixture of air-sea-land-space-cyber weapons, strategies and methods of attack, creating a complex interwoven picture of variables likely to confuse even the most elite commanders.

This anticipated “mix” is a key reason why futurists and weapons developers are working to quickly evolve cutting-edge applications of AI, so that vast and seemingly incomprehensible pools of data from disparate sources can be gathered, organized, analyzed and transmitted in real time to human decision makers.

In this respect, advanced algorithms can increasingly bounce incoming sensor and battlefield information off a seemingly limitless database to draw comparisons, solve problems and make critical, time-sensitive decisions for human commanders in war. Many procedural tasks, such as finding moments of combat relevance amid hours of video feeds or ISR data, can be performed far faster by AI-enabled computers. At the same time, there are certainly many traits, abilities and characteristics that are unique to human cognition and harder for machines to replicate or perform. This apparent dichotomy is perhaps why the Pentagon and the military services are fast pursuing an integrated approach combining human faculties with advanced AI-enabled computer algorithms.

Human-machine interface, manned-unmanned teaming and AI-enabled “machine learning” are all terms referring to a series of cutting-edge emerging technologies already redefining the future of warfare and introducing new tactics and concepts of operation.

Just how can a mathematically oriented machine using advanced computer algorithms truly learn things? What about more subjective variables that are less digestible or analyzable for machines, such as feeling, intuition or certain elements of human decision-making? Can a machine integrate a wide range of otherwise disconnected variables and analyze them in relation to one another?

A drone operating without labeled data is engaged in what ARL scientists call unsupervised learning, meaning it may not be able to “know” or contextualize what it is looking at. In effect, the data itself needs to be “tagged,” “labeled” and “identified” for the machine so that it can be quickly integrated into its database as a point of reference for comparison and analysis.

“If you want AI to learn the difference between cats and dogs, you have to show it images…but I also need to tell it which images are cats and which images are dogs, so I have to ‘tag’ that for the AI,” Waytowich told Warrior.
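The cats-and-dogs point can be made concrete with a toy example. The following is a minimal sketch, not ARL's method: the two-number “features” are invented stand-ins for real image data, and the function names `train_centroids` and `classify` are hypothetical. It shows only that the human-supplied tag is what lets the program attach a name to a new sample.

```python
from collections import defaultdict
from math import dist

# Invented feature vectors standing in for images; the second item in each
# pair is the human-supplied "tag" the quote describes.
labeled = [
    ((4.0, 9.0), "cat"),
    ((5.0, 10.0), "cat"),
    ((20.0, 30.0), "dog"),
    ((25.0, 35.0), "dog"),
]

def train_centroids(samples):
    """Average the feature vectors that share a label."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x, y), label in samples:
        sums[label][0] += x
        sums[label][1] += y
        counts[label] += 1
    return {
        label: (total[0] / counts[label], total[1] / counts[label])
        for label, total in sums.items()
    }

def classify(centroids, features):
    """Return the label whose average is closest to the new sample."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

centroids = train_centroids(labeled)
prediction = classify(centroids, (6.0, 11.0))
```

Without the labels, the same program could still notice that the samples fall into two groups, but it would have no way of naming either group “cat” or “dog.”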

As rapid advances in AI continue to reshape thinking about the future of warfare, some may raise the question as to whether there are limits to its capacity when compared to the still somewhat mysterious and highly capable human brain.

ARL scientists continue to explore this question, pointing out that the limits and possibilities of AI are still only beginning to emerge and are expected to yield new, currently unanticipated breakthroughs in coming years. Loosely speaking, the fundamental structure of how AI operates is analogous to the biological processing associated with the visual system of mammals.

The processes through which signals and electrical impulses are transmitted through the brains of mammals conceptually mirror or align with how AI operates, senior ARL scientists explain. This means that a fundamental interpretive paradigm can be established, but also that scientists are only beginning to scratch the surface of possibility when it comes to the kinds of performance characteristics, nuances and phenomena AI might be able to replicate or even exceed.

For instance, could an advanced AI-capable computer distinguish a dance “ball” from a soccer “ball” in a sentence by analyzing the surrounding words and determining context? This is precisely the kind of task AI is increasingly being developed to perform, essentially developing an ability to identify, organize and “integrate” new incoming data that does not exactly match anything already in its database.
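A rough sense of how surrounding words can settle the meaning of “ball” is given by the sketch below. It is a deliberately simple word-overlap heuristic, not how modern AI systems or ARL's software actually work; the cue-word lists and the function name `disambiguate_ball` are assumptions made up for illustration.

```python
# Hypothetical cue words for each sense of "ball"; a real system would learn
# such associations from data rather than rely on a hand-written list.
SENSE_CUES = {
    "dance": {"gown", "waltz", "danced", "orchestra", "invited", "masquerade"},
    "sports": {"kicked", "goal", "soccer", "field", "team", "striker"},
}

def disambiguate_ball(sentence):
    """Pick the sense whose cue words overlap most with the sentence."""
    words = {word.strip(".,!?").lower() for word in sentence.split()}
    scores = {sense: len(words & cues) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # With no cue words present, the context does not settle the question.
    return best if scores[best] > 0 else "unknown"

formal = disambiguate_ball("She wore a gown to the ball and danced a waltz.")
match = disambiguate_ball("The striker kicked the ball into the goal.")
```

Here `formal` resolves to the dance sense and `match` to the sports sense, while a sentence with no cue words at all comes back as “unknown.”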

Waytowich further told Warrior how humans can essentially “interact” with the machines by offering timely input of great relevance to computerized decision-making, a dynamic which enables fast “machine-learning” and helps “tag” data.

“If you have millions and millions of data samples, well, you need a lot of effort to label that. So that is one of the reasons why that type of solution training AI doesn’t scale the best, right? Because you need to spend a lot of human effort to label that data. Here, we’re taking a different approach where we’re trying to reduce the amount of data that it needs because we’re not trying to learn everything beforehand. We’re trying to get it to learn tasks that we want to do on the fly through just interacting with us,” Waytowich said.
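The contrast Waytowich draws, between labeling everything beforehand and learning on the fly through interaction, can be sketched in a few lines. This is an illustrative toy under stated assumptions, not the ARL system: the class `InteractiveLearner`, its `max_distance` threshold, the two-number observations and the action name “follow” are all hypothetical.

```python
from math import dist

class InteractiveLearner:
    """Toy learner that starts empty and remembers what a human teaches it."""

    def __init__(self, max_distance=2.0):
        self.examples = []  # (features, action) pairs taught so far
        self.max_distance = max_distance  # assumed similarity threshold

    def act(self, features):
        """Return the action of the closest taught example, or None if none is close."""
        if not self.examples:
            return None
        nearest, action = min(self.examples, key=lambda ex: dist(ex[0], features))
        return action if dist(nearest, features) <= self.max_distance else None

    def teach(self, features, action):
        """Store one human-provided example; no large pre-labeled dataset is needed."""
        self.examples.append((tuple(features), action))

learner = InteractiveLearner()
before = learner.act((1.0, 1.0))      # nothing taught yet, so it asks for help
learner.teach((1.0, 1.0), "follow")   # a single correction from the human
after = learner.act((1.2, 0.9))       # a similar situation is now handled
unfamiliar = learner.act((10.0, 10.0))
```

The learner acts on its own only when a new situation resembles something it has been shown, and otherwise returns `None`, which stands in for handing the decision back to the human.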

Building upon this premise, many industry and military developers are looking at ways through which AI-enabled machines can help perceive, understand and organize more subjective phenomena such as intuition, personality, temperament, reasoning, speech and other factors which inform human decision making.

Clearly, the somewhat ineffable mix of variables informing human thought and decision-making is likely quite difficult to replicate. Yet by recognizing speech patterns, historical behavior or other influences in relation to one another, machines can perhaps calculate, approximate or at least shed some light upon seemingly subjective cognitive processes. Are there ways machines can learn to “tag” data autonomously?

That is precisely what seems to be the point of the ARL initiatives, as their discoveries related to machine learning could lead to future warfare scenarios wherein autonomous weaponized platforms are able to respond quickly and adjust effectively in real time to unanticipated developments with precision.

“If there’s a new task that you want, we want the AI to be able to know, and understand what it needs to do in these new situations. But you know, AI isn’t quite there yet. Right? It’s brittle, it requires a lot of data…and most of the time requires a team of engineers and computer scientists somewhere behind the scene, making sure it doesn’t fail. What we want is to push that to where we can adapt it on the edge and just have the soldier be able to adapt that AI in the field,” Waytowich said.
