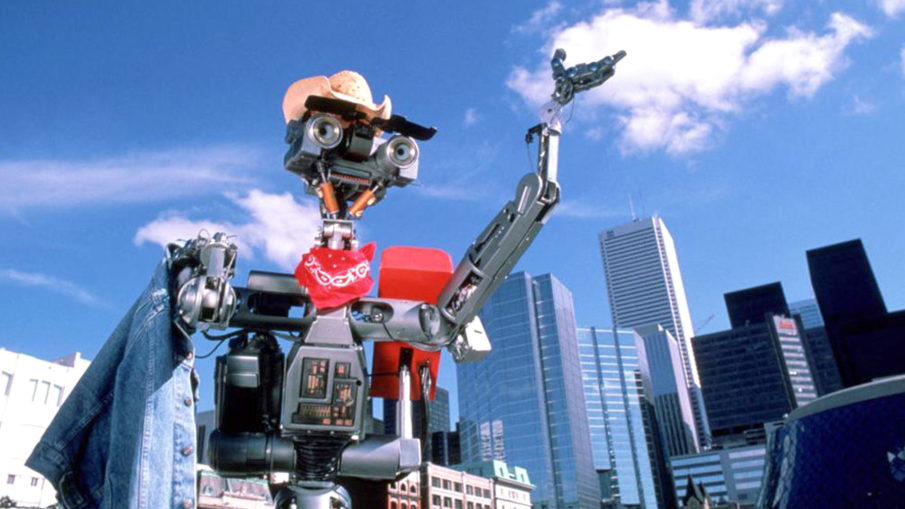

In 1942, prolific author Isaac Asimov published a short story called “Runaround,” in which two characters named Powell and Donovan, along with their trusty robot Speedy, are sent to Mercury to restart a mining operation on the planet that was abandoned a decade earlier. The plot of this futuristic Sci-Fi tale (set in the very futuristic Sci-Fi date of 2015) pivots around Asimov’s Three Laws of Robotics. Eventually, Asimov would add a fourth (sometimes referred to as a “zeroth”) law that superseded all others. These laws were intended to govern the behavior of all robots, and to protect their human creators. Asimov’s laws were laid out as such:

- A robot may not harm humanity, or, by inaction, allow humanity to come to harm.

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

The intent behind Asimov’s laws was simple: it was already apparent way back in 1942 that robots could feasibly become more intelligent, more capable, stronger, and even more dangerous than their human counterparts, and as such, rules needed to be established to protect humanity from its own creations. Like Frankenstein’s Monster, a robot blessed with sentience but lacking in compassion could mean Terminator-like repercussions for its creator – or the creator’s entire race.

These fictional laws eventually wormed their way out of the pop-culture lexicon and into the minds of scientists working to develop real robotic technologies for use in a wide variety of applications, including one that would directly violate Asimov’s first law: the military.

The usefulness of such a set of laws is not lost on the European Union, which proposed legislation on Friday that would establish a series of rules designed to govern the construction, operation, and even sentience of robots being developed within the EU.

“A growing number of areas of our daily lives are increasingly affected by robotics,” said Mady Delvaux, the parliamentarian who authored the proposal. “To ensure that robots are and will remain in the service of humans, we urgently need to create a robust European legal framework.”

The proposal on robot governance is bound for consideration by the European Commission, after having been passed by the European Parliament’s legal affairs committee on Thursday. Among the laws proposed in the legislation is the requirement for a robot “kill switch” to be installed on all robots so they may be powered down and reprogrammed in the event of a malfunction. The legislation even cites Asimov’s Laws of Robotics, suggesting that designers, producers and operators of robots should “generally be governed” by the sentiments expressed in the late author’s writings. It also mandates that robots be built in a manner that makes them easily discernible from humans, in order to prevent people from developing emotional connections to them.

Lawmakers also discussed other issues that could affect future robot builders and owners, such as the requirement for “robot insurance” to be taken out by those responsible for each machine, similar to the way many places require car insurance as a part of owning and operating an automobile.

Strangest of all the issues raised by this robotic legislation is the question of granting robots rights as “electronic persons” if a system is ever developed that would allow a robot to make entirely autonomous decisions free from human control. European lawmakers suggest that these robots should be held personally accountable for their actions, including any damage to property they may cause.

“The greater a robot’s learning capability or autonomy is, the lower other parties’ responsibilities should be and the longer a robot’s ‘education’ has lasted, the greater the responsibility of its ‘teacher’ should be,” the document states.

An extension of that consideration, but likely one that could come into play sooner, is the idea that robots may need to pay taxes and contribute to social security, or at least their owners may need to on their behalf. This measure, intended to address the likelihood of substantial job loss brought about by automation, coincides with the proposal’s request that European leaders consider implementing a universal income, intended to curb poverty as a result of robot-based job losses.

This proposal may sound farfetched, but it is worth noting that the human race went from the Wright brothers’ first flight in 1903 all the way to putting a man on the moon in a mere sixty-six years. By that comparison, my new best friend Alexa (who lives inside my Amazon Echo) could be protesting on the steps of Congress for the right to vote before I’m old enough to collect social security myself. It may be in our best interest to begin discussing such rules to be placed both on robots and on robot manufacturers before Siri and Alexa finally get the chance to fight one another for my affection.

I’m not sure how exactly such a fight would play out, but I picture them both riding on Roombas and using Ka-bars to joust while my friend’s Android phone sits by the fire to give Cortana a ringside seat.

Further considerations requested by the legislation include requiring robot makers to register their creations and submit the source code that powers them for use in investigations into malfunctions or intentional misuse. New robotic designs may also require approval from an established research ethics committee before their makers are legally authorized to construct new kinds of robots.

All jokes aside, the EU is taking the possibility of a robot-infused future society very seriously, and we may want to follow suit. As the legislation itself states, failing to recognize the potential threat of a robot-heavy civilization could “pose a challenge to humanity’s capacity to control its own creation and, consequently, perhaps also to its capacity to be in charge of its own destiny and to ensure the survival of the species.”

And I don’t know about you, but I’d much rather fight zombies than robots.

Image courtesy of TriStar Pictures